Progress Update

The signatory companies of the Tech Accord are sharing the progress they made against the Accord's eight core commitments between its signing in February 2024 and September 2024.

Progress by company

Adobe is committed to working together with industry and government to combat the deceptive use of AI in the 2024 elections. As part of our commitment to the Munich Tech Accord, Adobe has taken important steps to counter harmful AI content:

Adobe is a co-founder of the Content Authenticity Initiative (CAI) and a co-founder and steering committee member of the Coalition for Content Provenance and Authenticity (C2PA), a standards organization that is a Joint Development Foundation project within the Linux Foundation. We are committed to collaborating with CAI and C2PA members to ensure that open technical standards for provenance are maintained to the highest quality; are used to implement interoperable content provenance across the digital ecosystem; and are ultimately adopted by international standards organizations as the gold standard for helping to combat misinformation. As part of this work, the C2PA has a working group dedicated to threats and harms mitigation that is led by a civil society organization.

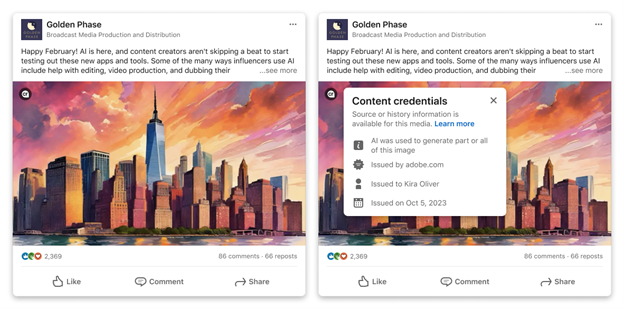

Adobe has also invested in the development of free, open-source technology called Content Credentials, which leverages the C2PA open technical standard and acts like a “nutrition label” for digital content. Anyone can use Content Credentials to show important information about how and when the content was created and edited, including whether AI was used. Adobe applies Content Credentials to images generated with Adobe Firefly, our family of creative generative AI models, to provide transparency around AI use. In addition, Adobe allows creators to apply Content Credentials to their work through other popular Adobe Creative Cloud applications including Adobe Photoshop and Adobe Lightroom.

Adobe is committed to advancing the mission of the CAI and C2PA. Since signing the Tech Accord, we have helped grow the CAI’s membership to more than 3,300 members globally. We also continue to promote and drive widespread adoption of Content Credentials, including releasing an open-source video player that will make Content Credentials visible on videos. As we approach the U.S. presidential election, Adobe recently worked with party conventions to drive awareness and adoption of Content Credentials, helping them add verifiable attribution to official campaign materials that were created during the conventions. And as part of our work to ensure the public understands how to leverage provenance tools such as Content Credentials, Adobe has supported the development of the CAI’s media literacy curriculum aimed at providing students with critical media and visual literacy skills to help them better navigate today’s AI-powered digital ecosystem.

Over the past seven months, we’ve continued to refine and execute on our comprehensive, proactive approach to safeguarding elections around the globe.

In May 2024, we updated our Usage Policy to more clearly prohibit the use of our products to interfere with the electoral process, generate false or misleading information about election laws or candidates, or engage in political lobbying or campaigning. We’ve conducted rigorous Policy Vulnerability Testing before major elections globally, including in India, South Africa, Mexico, France, the United Kingdom, and the European Union, and in June 2024 we published a detailed blog post on our testing methodology, including a public set of quantitative evaluations. Our testing has informed improvements to our safety systems and helps our Trust and Safety team anticipate, detect, and mitigate harmful misuse of our models in election-related contexts.

We’ve helped spread awareness of safety interventions that can prevent generative AI from being misused by bad actors in global elections. We briefed European Commission staff on our election integrity research and interventions ahead of the June EU parliamentary elections, informed multiple state and federal US policymakers of our work, and have been in touch with US civil society organizations and global policymakers throughout this election cycle. Looking ahead, we’ll continue to deepen our collaboration with industry, civil society, and policymakers to prepare for the US election.

ElevenLabs remains steadfast in its commitment to the resolutions set forth in the Tech Accord to Combat Deceptive Use of AI in 2024 Elections, and to working with industry and government to safeguard the integrity of democratic processes around the globe.

Transparency, Provenance, and Deepfake Detection

Enabling clear identification of AI-generated content is one key aspect of ElevenLabs’ responsible development efforts. To promote transparency, we publicly released an AI Speech Classifier, which lets anyone upload an audio sample to check whether it was generated with an ElevenLabs tool. By making our classifier publicly available, we aim to help prevent the spread of misinformation by making the source of audio content easier to assess. We are also working with AI safety technology companies to improve their tools for identifying AI-generated content, including election-related deepfakes. For example, we have partnered with Reality Defender, a cybersecurity company specializing in deepfake detection, to leverage our proprietary models and methods to improve the efficacy and robustness of its tools. This will enable Reality Defender’s clients, including governments and international enterprises, to detect and prevent AI-generated threats in real time, safeguarding millions from misinformation and sophisticated fraud. In addition, we believe that downstream AI detection tools, such as metadata, watermarks, and fingerprinting solutions, are essential. To that end, we continue to support the widespread adoption of industry standards for provenance as a member of the Coalition for Content Provenance and Authenticity (C2PA) and the Content Authenticity Initiative.

Additional Safeguards to Prevent the Abuse of AI in Elections

We have implemented various safeguards on our platform that are designed to prevent AI audio from being abused in the context of elections. We are continuously enhancing our abuse prevention, detection, and enforcement efforts while actively testing new ways to counteract misuse. Under our Terms of Service and Prohibited Use Policy, we prohibit the impersonation of political candidates, and we are also expanding our automated tools that prevent the creation of voice clones that mimic major candidates and other political figures. We also prohibit the use of our tools for voter suppression, the disruption of electoral processes (including through the spread of misinformation), and political advertising. We continuously monitor for and remove content that violates these policies through a combination of automated and human review. Further, we are committed to ensuring that there are consequences for bad actors who misuse our products. Our voice cloning tools are only available to users who have verified their accounts with contact information and billing details. If a bad actor misuses our tools, our systems enable us to trace the content they generated back to the originating account. After identifying such accounts, ElevenLabs takes action appropriate to the violation, which could include warnings, removal of voices, a ban on the account, and, in appropriate cases, reporting to authorities.

Reporting Violations

We take misuse of our AI voices seriously and have implemented processes for users to report content that raises concerns. For example, we provide a webform through which users can describe their concerns and add any documentation that will help us address the issue. We endeavor to take prompt action when users raise concerns with us, which can result, and has resulted, in permanent bans for those who have violated our policies.

Outreach, Policymaking, and Collaboration

We are collaborating with governments, civil society organizations, and academic institutions in the US and UK to ensure the safe development and use of AI, including raising awareness around deepfakes. We are a member of the U.S. National Institute of Standards and Technology’s (NIST) AI Safety Institute Consortium, and have participated in work by the White House, the National Security Council, and the Office of the Director of National Intelligence regarding AI audio. We are also working with Congress on efforts to prevent AI from interfering with the democratic process, including supporting the bipartisan Protect Elections from Deceptive AI Act, led by Senators Amy Klobuchar, Josh Hawley, Chris Coons, and Susan Collins, which would ban the use of AI to generate materially deceptive content falsely depicting federal candidates in political ads to influence federal elections.

2024 is the largest global election year in history, and it has challenged GitHub to consider what is at stake for developers and how we can act responsibly as a platform. Although GitHub is not a general-purpose social media platform or an AI-powered media generation platform, it is a code collaboration platform where users may research and develop tools to generate or detect synthetic media. In line with our commitments as a signatory of the AI Elections Accord, in April 2024 GitHub updated its Acceptable Use Policies to address the development of synthetic and manipulated media tools for the creation of non-consensual intimate imagery (NCII) and disinformation, seeking to strike a balance between addressing misuse of synthetic media tools and enabling legitimate research on these technologies. Since implementing this policy, GitHub has taken action against repositories for synthetic media tools that were designed for, encourage, or promote the creation of abusive synthetic media, including disinformation and NCII.

Google and YouTube’s Progress Against the Tech Accord to Combat Deceptive Use of AI in 2024 Elections

This year, more than 50 national elections — including the upcoming U.S. Presidential election — are taking place around the world. Supporting elections is a core element of Google’s responsibility to our users, and we are committed to doing our part to protect the integrity of democratic processes globally. Earlier this year, we were proud to be among the original signatories of the Tech Accord to Combat Deceptive Use of AI in 2024 Elections.

Google has long taken a principled and responsible approach to introducing Generative AI products. The Tech Accord is an extension of our commitment to developing AI technologies responsibly and safely, as well as of our work to promote election integrity around the world.

In line with our commitments in the Tech Accord, we have taken a number of steps across our products to reduce the risks that intentional, undisclosed, and deceptive AI-generated imagery, audio, or video (“Deceptive AI Election Content”) may pose to the integrity of electoral processes.

Addressing Commitments 1-4 (Developing technologies; Assessing models; Detecting distribution; and Addressing Deceptive AI Election Content)

Helping empower users to identify AI-generated content is critical to promoting trust in information, including around elections. Google developed Model Cards to promote transparency and a shared understanding of AI models. In addition, we have addressed harmful content through our AI Prohibited Use Policy, begun to proactively test systems using AI-Assisted Red Teaming, and restricted responses to election-related queries across many Generative AI consumer apps and experiences.

We have also invested heavily in developing and implementing state-of-the-art capabilities to help our users identify AI-generated content. With respect to content provenance, we’ve expanded our SynthID watermarking toolkit to more Generative AI tools and to more forms of media, including text, audio, images, and video. We were also the first tech company to require election advertisers to prominently disclose when their ads include realistic synthetic content that’s been digitally altered or generated, and we recently added Google-generated disclosures for some YouTube election ad formats. YouTube now also requires creators to disclose when they’ve uploaded meaningfully altered or synthetically generated content that seems realistic, and it adds a label for disclosed content in the video’s description. For election-related content, YouTube also displays a more prominent label on the video player for added transparency. In addition, Google joined the C2PA as a steering committee member and is actively exploring ways to incorporate Content Credentials into our own products and services, including Ads, Search, and YouTube.

Addressing Commitments 5-7 (Fostering cross-industry resilience; Providing transparency to the Public; and Engaging with Civil Society)

Our work also includes actively sharing our learnings and expertise with researchers and others in the industry. These efforts extend to increasing public awareness by, for example, actively publishing and updating our approach to AI, sharing research into provenance solutions, and setting out our approach to content labeling.

Artificial intelligence innovation raises complex questions that no one company can answer alone. We continue to engage and collaborate with a diverse set of partners, including the Partnership on AI and MLCommons, and we are a founding member of the Frontier Model Forum, a consortium focused on sharing safety best practices and informing collective efforts to advance safety research. We also support the Global Fact Check Fund, as well as numerous civil society, research, and media literacy efforts to help build resilience and overall understanding around Generative AI. Our websites provide more details on our approach to Generative AI content and the U.S. election, as well as to the recent EU Parliamentary elections, the general election in India, and recent elections in the U.K. and France.

We look forward to continuing to engage with stakeholders and to doing our part to advance the AI ecosystem.

Enhancing Transparency through ChatEXAONE

In August 2024, LG AI Research launched ChatEXAONE, an Enterprise AI Agent based on the EXAONE 3.0 model. As a starting point, the service aims to improve the productivity of employees within the LG Group while addressing a known weakness of existing generative AI: the accuracy and reliability of the content it produces. ChatEXAONE’s strength lies in providing references and evidence from reliable sources for the content it generates. In elections, AI-driven deception often thrives in the absence of verifiable sources. By consistently citing its sources and presenting evidence, ChatEXAONE not only improves the accuracy of information but also supports the wider ecosystem in promoting trust and accountability. This feature aligns with the core commitments of the Tech Accord, which emphasize the need to develop and implement technology to mitigate risks related to Deceptive AI Election Content.

Driving AI Literacy and Ethical AI Usage

While technological safeguards are essential, we recognize that combating deceptive AI content requires more than just tools; it also requires informed users. To address this, LG AI Research has been investing in educational campaigns aimed at raising AI literacy. Through programs such as the LG Discovery Lab and AI Aimers, it offers younger generations practical education on the ethical use of AI technologies. Moreover, LG’s collaboration with UNESCO to develop an AI ethics curriculum, set to launch globally by 2026, underscores its long-term commitment to fostering responsible AI use. This initiative will play a pivotal role in equipping users worldwide with the knowledge to recognize and mitigate the risks associated with AI-driven misinformation, especially during elections. In line with the Tech Accord’s goals, these educational efforts help ensure that the public understands both the benefits and the potential dangers of AI.

By prioritizing transparency through tools like ChatEXAONE and driving education around AI literacy, LG is actively contributing to the global effort to ensure AI is used ethically during elections.

Work undertaken since February 2024, illustrating progress made against the Accord’s goals and commitments.

To further the Tech Accord’s goals, LinkedIn now labels content containing industry-leading “Content Credentials” technology developed by the Coalition for Content Provenance and Authenticity (“C2PA”), including AI-generated content containing C2PA metadata. Content Credentials on LinkedIn appear as a “Cr” icon on images and videos that contain C2PA metadata. By clicking the icon, LinkedIn members can trace the origin of the media, including the source and history of the content and whether it was created or edited by AI.

C2PA metadata helps keep digital information reliable, protect against unauthorized use, and create a transparent, secure digital environment for creators, publishers, and members. More information about LinkedIn’s work is in our C2PA Help Center article.

LinkedIn also prohibits the distribution of synthetic or manipulated media, such as doctored images or videos that distort real-life events and are likely to cause harm to the subject, other individuals or groups, or society at large (see our “Do not share false or misleading content” policy). Specifically, LinkedIn prohibits photorealistic and/or audiorealistic media that depicts a person saying something they did not say or doing something they did not do without clearly disclosing the fake or altered nature of the material.

Election security is a critical issue that McAfee has supported globally for many years. McAfee has also been a leader in researching and protecting customers from the risks that AI technology can pose, through a combination of technology innovation and consumer education.

This August, with the upcoming U.S. election on the horizon, McAfee launched a new product, McAfee Deepfake Detector, to help consumers detect AI-generated content and other deepfake material. This initiative is of extraordinary importance in combating misinformation during this critical time. McAfee Deepfake Detector works directly in users’ browsers on select AI PCs, alerting them when any video they are watching contains AI-generated audio. McAfee began with audio because its threat research has found that most deepfake videos use AI-manipulated or AI-generated audio as the primary means of misleading viewers. McAfee is committed to expanding availability of this product to more platforms and to further developing its technology to identify other forms of deepfake content, such as images. In addition, McAfee is exploring other opportunities at the intersection of content distribution and consumption to apply its deepfake detection technology to identifying deceptive AI election content and other deepfake content.

Beyond its products, McAfee has also long been committed to educating consumers to help them stay safe online and avoid fraud, scams, and misinformation. To that end, McAfee recently launched the Smart AI Hub at mcafee.ai, which has resources and interactive elements to build awareness of deepfakes and AI-driven scams. McAfee’s education efforts also include blogs and social posts about deceptive AI election content and how to recognize it, including articles about specific instances of such content. In addition to its own publishing, McAfee also regularly engages with news outlets to provide expertise and help spread awareness on these issues.

Meta Investments in AI Transparency, Standards, and Public Engagement

Meta works across many channels to align with the goals of the Tech Accord, including public reporting, contributions to industry coalitions and standards organizations, the release of open source tools, and a variety of mechanisms in the user-facing surfaces of our products and services. Our technical research teams continue to push the state of the art forward, open sourcing new methods for more durable watermarking of AI-generated images and for AI-generated audio detection, and releasing an update to our open LLM-powered content moderation tool, LlamaGuard. We continue to fine-tune our AI models and conduct extensive red teaming exercises with both internal and external experts. We have supported the Partnership on AI’s assembly of a Glossary of Synthetic Media Transparency Methods to improve fluency across industry, academia, and civil society on the range of methods available to support AI transparency. In September 2024 we joined the Steering Committee of the Coalition for Content Provenance and Authenticity (C2PA.org) to help guide the development of the C2PA provenance specification and other efforts to address deceptive online content through technical approaches to certifying the source and history of media content.

Since January 2024, we have required advertisers to disclose the use of AI in the creation of ads about social issues, elections, and politics. In certain cases, advertisers must disclose when their ad contains a photorealistic image or video, or realistic-sounding audio, that was digitally created or altered, including by AI. When an advertiser makes this disclosure, Meta adds information to the ad and stores it for seven years in the publicly available Ad Library. If we determine that an advertiser hasn't disclosed as required, we will reject the ad. Repeated failure to disclose the use of AI may result in penalties against the advertiser.

Since its launch in 2023, we have added both a visible “Imagined with AI” label and invisible watermarking to photorealistic images created using our Meta AI Imagine feature. In May, we started labeling images from other image generators based on our detection of industry-shared signals, and gave people a tool to self-disclose that they’re uploading other AI-generated content so we can provide labels to our users. We require people to use this disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered. We now add an “AI info” label and share whether the content is labeled because of industry-shared signals, or because someone self-disclosed. Starting this week, for content that we detect was only modified or edited by AI tools, we are moving the “AI info” label to the post’s menu.

We continue to remove content that violates our Community Standards (whether created by AI or a person), including content related to elections. Additionally, when our robust network of third-party fact-checking partners rates content as False or Altered, including content altered with AI, we move it lower in Feed and show additional information so people can decide what to read, trust, and share. We have held ourselves accountable through public transparency about our AI systems’ responses to critical moments in this election cycle, and through constant adjustments to those systems when issues arise. We continue our work to identify and disrupt malicious actors attempting to interfere with global politics, and have included detailed analysis of their use of generative AI in our public reporting on their behavior.

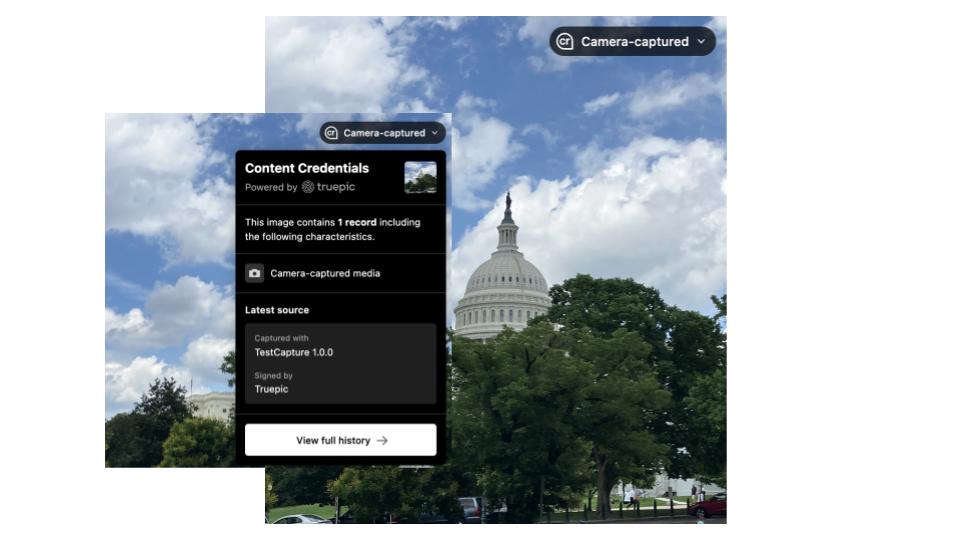

Since the adoption of the Tech Accord in February 2024, Microsoft has made significant strides to prevent the creation of deceptive AI content targeting elections, enhance detection and response capabilities, and improve transparency and public awareness. By incorporating Content Credentials, an open standard from the Coalition for Content Provenance and Authenticity (C2PA), across various platforms including Bing Image Creator and LinkedIn, the company is increasing the likelihood that AI-generated images and videos are marked and verifiable. We have also launched a pilot program to help political campaigns and news organizations apply these standards to their own authentic media, and we collaborated with fellow signatory Truepic to develop an app available to pilot participants.

To combat the dissemination of deceptive AI content in elections, Microsoft’s AI for Good Lab has developed detection models trained on a vast dataset, enabling the identification of AI-generated or manipulated content. Additionally, Microsoft has partnered with True Media to provide governments, civil society, and journalists with free tools that help verify media authenticity. Microsoft has also created a reporting portal that lets candidates, campaigns, and election authorities report possible deceptive AI content targeting them and allows Microsoft to respond rapidly to these reports.

Further, the company has engaged in global efforts to educate people and build resilience against deceptive AI. So far this year we have conducted more than 150 training sessions for political stakeholders in 23 countries, reaching more than 4,700 participants. Microsoft has also run a comprehensive public awareness campaign, “Check, Recheck, Vote,” to inform voters about potential AI risks to the election and how to find trusted, authoritative election information. Overall, our public awareness campaigns outside the United States have reached more than 350 million people, driving almost three million engagements worldwide. Our U.S. public awareness campaign has just begun and has already reached more than six million people, with more than 30,000 engagements.

Finally, in May, we announced a series of societal resilience grants in partnership with fellow signatory OpenAI. Grants from the partnership have equipped several organizations, including Older Adults Technology Services (OATS) from AARP, the International Institute for Democracy and Electoral Assistance (International IDEA), C2PA, and Partnership on AI (PAI), to deliver AI education and training that illuminate the potential of AI while also teaching how to use AI safely and mitigate the harms of deceptive AI content.

For more information on steps Microsoft is taking to protect elections, read our blog post about the Tech Accord and one about Microsoft’s Election Protection Commitments.

In the last seven months, Nota has signed on dozens of TV stations, newspapers, radio stations, and lifestyle magazines, all using our C2PA-compliant AI generation system and tagging structure to ensure responsible and transparent use of AI. As this technology evolves, Nota is committed to actively integrating the best AI data and transparency workflows and established practices. We believe that inclusive and intelligent technology guardrails are fundamental to the future of our civic work.

As reaffirmed when we signed the Tech Accord in February, OpenAI is focused on mitigating risks related to deceptive use of AI in elections by furthering our provenance work, enforcing our policies, and supporting whole-of-society resilience. As part of our efforts to contribute to authenticity standards, we joined the Coalition for Content Provenance and Authenticity (C2PA) steering committee in May 2024. Earlier this year we began adding C2PA metadata to all images created and edited by DALL·E 3, our latest image model, in ChatGPT and the OpenAI API. We are also investing in provenance technology for non-public models that are currently limited to small-scale research previews: we will be integrating C2PA metadata for Sora, our text-to-video model, and have incorporated watermarks into Voice Engine, our model for creating custom voices. OpenAI has continued to invest in advancing innovations in provenance, including by sharing an image detection classifier for DALL·E 3 with partners – such as research labs and research-oriented journalism nonprofits – to understand its usefulness. We regularly publish research to foster transparency and promote open dialogue on these important issues.

To prevent, detect, and address deceptive content, our usage policies prohibit the use of our tools for political campaigning, impersonating officials, or misrepresenting voting processes. We have guardrails in place, including training our systems to reject requests to generate images of real people. We have disrupted several covert influence operations, shared threat intelligence with government, civil society, and industry stakeholders, and published our findings to amplify our efforts to address these challenges.

To foster public awareness and whole-of-society resilience, we have worked with various bodies, such as the National Association of Secretaries of State (NASS) in the US and the European Parliament in the EU, to direct users to official websites when they ask ChatGPT about voter registration or polling locations. To drive adoption and understanding of provenance standards, we joined Microsoft in launching a societal resilience fund. This $2 million fund will support AI education and understanding, including through organizations like Older Adults Technology Services from AARP, International IDEA, and Partnership on AI. Finally, we are proud to have endorsed a number of bipartisan federal and state bills that further work on provenance, protect the voice and visual likeness of creators, and ban the distribution of deceptive AI-generated audio, images, or video relating to federal candidates in political advertising, including the Protect Elections from Deceptive AI Act, the No FAKES Act, and the California Digital Content Provenance Standards.

Since February, we have continued investing to combat deceptive AI and protect election integrity on TikTok for our communities around the world, including in the EU, UK, Mexico, and the upcoming US election.

As we continue investing in AI detection and labeling efforts, in May we were proud to become the first video-sharing or social media platform to begin implementing the Content Credentials technology of the Coalition for Content Provenance and Authenticity (“C2PA”). This means we can read Content Credentials in order to recognize and label AI-generated content (AIGC) in images and videos, and we’ve started attaching Content Credentials to TikTok content to help anyone using C2PA’s Verify tool identify AIGC that was made on TikTok. We automatically disclose the use of TikTok AI effects, and since last year we have offered a tool for people to voluntarily label their AI-generated content, which more than 37 million creators globally have used so far.

We engage with a range of independent experts on an ongoing basis and have further tightened relevant policies on harmful misinformation, foreign influence attempts, hate speech, and misleading AI-generated content based on some of their input. This includes strengthening our AIGC policies to prohibit misleading AIGC that falsely shows a public figure being degraded, harassed, or politically endorsed or condemned by an individual or group, as well as AIGC that falsely shows a crisis event or is made to seem as if it comes from an authoritative source, such as a reputable news organization. We’ve maintained policies against misleading manipulated media for years, and since last year we have required creators to label AIGC that contains realistic scenes and prohibited AIGC that falsely depicts public officials making endorsements.

We partner with experts to evolve our approach, share our progress, and help educate our community. We launched a new Harmful Misinformation Guide in our Safety Center to help our community navigate online misinformation, developed new media literacy resources with guidance from experts including MediaWise and WITNESS, and are teaming up with peers to support a new AI Literacy initiative from the National Association for Media Literacy Education. To help us regularly tap into insights from outside of TikTok as the US election approaches, we formed a US Elections Integrity Advisory Group, composed of experts in AI, elections and civic integrity, hate, violent extremism, and voter protection issues, with whom we meet regularly. We’re continuing to share progress on our US election efforts, including our removal of AI-generated content that violates our policies, in a new US Election Integrity Hub in our Transparency Center, and we continue to share data on our wider content moderation efforts in our Community Guidelines Enforcement reports.

Truepic is committed to strengthening transparency in global elections, especially following its signing of the AI Election Accord earlier this year. Truepic has partnered with various organizations to deploy digital content transparency technology that promotes authenticity in the global election process. Truepic and Microsoft are working together to provide Content Integrity tools across global elections, aiming to advance transparency and authenticity across various aspects of the election process and for its key stakeholders. By integrating Truepic’s authenticating mobile camera SDK into Microsoft’s Content Integrity suite, campaigns, newsrooms, and institutions can securely capture verified media with embedded Coalition for Content Provenance and Authenticity (C2PA) Content Credentials. This process ensures that content authenticity can be immediately verified, helping to combat misleading information and preventing bad actors from falsely claiming that genuine content is fake.

Truepic’s long-running partnership with Ballotpedia has also reached new milestones in the past few months. Truepic’s technology has helped Ballotpedia verify the identities of more than 8,000 U.S. political candidates, reducing the risks of impersonation and AI-generated misinformation and enhancing the credibility of candidate information.

Additionally, Truepic supported The Carter Center’s monitoring team during the elections in Venezuela last month, providing secure technology to capture and verify authentic digital media throughout the country with Content Credentials. This marks the first time The Carter Center has deployed content provenance, and it highlights the importance of maintaining transparency in challenging and potentially undemocratic elections.
